TFA - Transparent Live Code Offloading on FPGA

Using FPGAs to circumvent the physical and technological limitations in the design of computing devices is a recent phenomenon that is quickly gaining momentum. However, while this technological evolution provides systems integrators with a compact and cost-effective way to add advanced functionality to products, it has dramatically raised the overall complexity of systems: exploiting the new capabilities now requires a wide range of competencies that the companies and institutions needing them rarely possess. Because of the considerable effort demanded at development time, applicability is often limited to a small set of supported brands and models, and the result is effective only when the predicted usage patterns match the actual ones.

High-Level Synthesis (HLS) partially mitigates these problems by removing the language barrier. However, compiling and deploying a bit-stream is an extremely long process, and HLS development requires usage patterns to be established a priori, which can lead to suboptimal use of the available hardware.

The TFA project [1], developed by the REDS Institute, follows the same philosophy as the VPE [2] and HPA [3] projects: dynamically adapting to the available data and computational resources while remaining transparent to the application developer. Indeed, the solution we propose requires no changes to the application code, not even pragma annotations to guide the optimization (although it can benefit from them when present), relieving the developer of the burden of knowing the target platform's details. Moreover, she does not have to forecast use cases to prevent performance bottlenecks, nor does she have to decide statically which parts of the system should be accelerated: the system transparently identifies parallelizable, computationally intensive code fragments and dispatches them to a data-flow overlay engine (an enhanced version of the one presented in Capalija et al., 2013) pre-programmed on the FPGA. Because the bit-stream we use is fixed, we can, unlike with HLS, alter the functionality offered by the FPGA on the fly to adapt it to current usage patterns. Finally, since we operate at the level of LLVM’s Intermediate Representation (IR), our approach is language-agnostic.
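To make "transparent dispatch" concrete, here is a minimal Python sketch that works only by analogy: TFA operates on LLVM IR, not Python, and `DispatchTable`, `scale_add_cpu` and `scale_add_overlay_stub` are hypothetical names. The idea it illustrates is that callers always invoke a kernel through a named slot, so the runtime can redirect that slot to an accelerated version (or back) without any change to the calling code.

```python
from typing import Callable, Dict

Kernel = Callable[[float, float], float]


class DispatchTable:
    """Hypothetical indirection layer: callers always go through call(), so the
    implementation behind a name can change at run time without code changes."""

    def __init__(self) -> None:
        self._software: Dict[str, Kernel] = {}
        self._active: Dict[str, Kernel] = {}

    def register(self, name: str, software_impl: Kernel) -> None:
        # Keep the original CPU version so that offloading can be undone later.
        self._software[name] = software_impl
        self._active[name] = software_impl

    def offload(self, name: str, accelerated_impl: Kernel) -> None:
        # Redirect future calls, e.g. to a stub that would feed the FPGA overlay.
        self._active[name] = accelerated_impl

    def revert(self, name: str) -> None:
        # Fall back to the software version if the region loses relevance.
        self._active[name] = self._software[name]

    def current(self, name: str) -> Kernel:
        return self._active[name]

    def call(self, name: str, a: float, b: float) -> float:
        return self._active[name](a, b)


def scale_add_cpu(a: float, b: float) -> float:
    return a * b + 1.0


def scale_add_overlay_stub(a: float, b: float) -> float:
    # Placeholder for the accelerated path; here it simply computes the same value.
    return a * b + 1.0
```

The caller keeps writing `table.call("k", x, y)` whether `"k"` is currently bound to `scale_add_cpu` or to the stub; only the runtime decides which one runs.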

How does TFA work?

Figure 1 sketches the main components of the TFA system. At its heart lies a Just-In-Time (JIT) compiler, coupled with a low-overhead performance monitor that automatically detects which code fragments consume the largest fraction of resources (namely, execution time or memory accesses). The use of a JIT framework is key to our approach: in addition to enabling optimizations that can only be applied at run time, it lets us detect which parts of the code are actually taking the largest fraction of resources for the current inputs. With this information we can avoid offloading unimportant code fragments that would yield little gain, while retaining the ability to revert these decisions should those fragments become more relevant.

Once a code region is identified as critical, it is analyzed to expose parallelization opportunities. Its Control Flow Graph (CFG) and Data Flow Graph (DFG) are then extracted and used to drive the placement and routing of functional units over the overlay, hereafter called the Data Flow Engine (DFE). Finally, the FPGA’s overlay is reconfigured on the fly to execute the new data-flow model. Once this is done (which takes a few hundred microseconds), we alter the execution flow of the code as in HPA and feed the FPGA with the data provided by the running application. An important characteristic of our proposal is that it is not tied to any specific hardware solution: we support all FPGAs, and the DFE we adopted has a parametric size so that it can adapt to the resources available on different devices. Moreover, the chosen overlay is itself only a choice of convenience, as our framework architecture is generic.
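The offload-or-revert policy described above could look like the following sketch. It assumes the monitor reports, for each time window, a sample count per code region measured wherever the region runs; the `HotspotSelector` class and its two thresholds are hypothetical, and using two thresholds (hysteresis) is an illustrative choice to avoid flip-flopping between decisions, not a documented TFA mechanism.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class HotspotSelector:
    """Hypothetical policy: offload a region once its share of the sampled
    resource usage reaches offload_share, revert it below revert_share."""

    offload_share: float = 0.30
    revert_share: float = 0.10
    offloaded: Set[str] = field(default_factory=set)

    def update(self, samples: Dict[str, int]) -> Dict[str, str]:
        """Consume one window of per-region sample counts; return decisions."""
        total = sum(samples.values())
        decisions: Dict[str, str] = {}
        if total == 0:
            return decisions  # nothing measured in this window: keep current state
        # Offloaded regions missing from the window count as zero usage.
        for region in sorted(set(samples) | self.offloaded):
            share = samples.get(region, 0) / total
            if region not in self.offloaded and share >= self.offload_share:
                self.offloaded.add(region)
                decisions[region] = "offload"
            elif region in self.offloaded and share < self.revert_share:
                self.offloaded.discard(region)
                decisions[region] = "revert"
        return decisions
```

For example, a first window of `{"loop_a": 70, "loop_b": 20, "init": 10}` offloads only `loop_a`; if the next window reports `{"loop_a": 5, "loop_b": 80, "init": 15}`, `loop_a` is reverted and `loop_b` is offloaded, while `init` stays on the CPU throughout.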

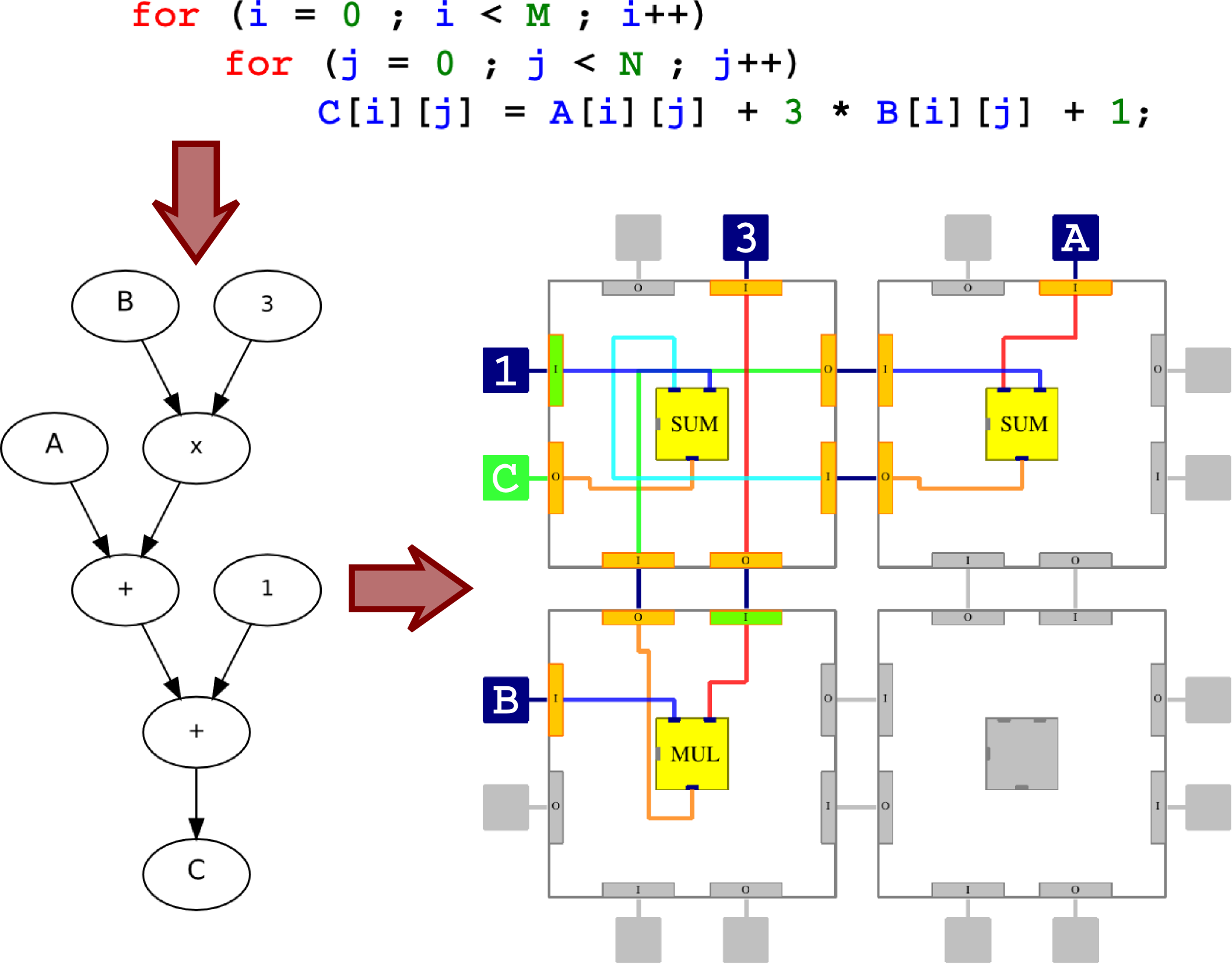

A graphical example of TFA in execution is shown in Figure 2: a code fragment is first converted into the corresponding graph, and then a 2×2 overlay is configured to execute its operations.
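The first step of Figure 2, turning a code fragment into a graph, can be illustrated on a toy straight-line fragment in single-assignment form. The three-address tuples below are a hypothetical stand-in for LLVM IR, and the fragment is made up rather than taken from Figure 2. The sketch records producer-to-consumer edges and computes the earliest step at which each operation can fire, which is what exposes independent operations that could run in parallel on separate functional units.

```python
from typing import Dict, List, Set, Tuple

# Toy three-address instruction: (destination, opcode, operand1, operand2).
# A hypothetical stand-in for LLVM IR, not TFA's internal representation.
Instr = Tuple[str, str, str, str]


def build_dfg(code: List[Instr]) -> Tuple[Dict[str, Set[str]], Set[str]]:
    """Return (edges, inputs): edges[producer] is the set of its consumers;
    inputs are operands that are read but never defined in the fragment."""
    defined = {dest for dest, _, _, _ in code}
    edges: Dict[str, Set[str]] = {dest: set() for dest in defined}
    inputs: Set[str] = set()
    for dest, _op, *operands in code:
        for src in operands:
            if src in defined:
                edges[src].add(dest)  # data flows from src's unit to dest's unit
            else:
                inputs.add(src)  # value must be fed from the application
    return edges, inputs


def asap_levels(code: List[Instr]) -> Dict[str, int]:
    """Earliest step at which each operation can fire (inputs are at step 0).
    Assumes single assignment and instructions listed in dependency order."""
    level: Dict[str, int] = {}
    for dest, _op, *operands in code:
        level[dest] = 1 + max(level.get(src, 0) for src in operands)
    return level
```

For the fragment `t1 = a + b`, `t2 = c * d`, `t3 = t1 - t2`, encoded as `[("t1", "add", "a", "b"), ("t2", "mul", "c", "d"), ("t3", "sub", "t1", "t2")]`, both `t1` and `t2` land on step 1 and can execute concurrently, while `t3` waits for them on step 2; the three operations fit within the four functional units of a 2×2 overlay.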

Since mapping the graph onto the overlay is a very complex problem (still unsolved in the literature), we use a custom Las Vegas-type algorithm that efficiently routes several dozen nodes.
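TFA's own routing algorithm is custom and is not described here; the sketch below only illustrates what "Las Vegas-type" means: a randomized search whose answer, when it returns one, is always correct, while its running time is random. For simplicity it assumes a size×size mesh in which a producer must occupy a cell adjacent to each of its consumers (no routing through intermediate units); `is_valid` and `place_las_vegas` are hypothetical names, and the attempt bound makes it a Las Vegas algorithm that may report failure (`None`) instead of running forever.

```python
import random
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]


def is_valid(placement: Dict[str, Cell], edges: List[Tuple[str, str]]) -> bool:
    """Assumed constraint: every producer sits next to each of its consumers."""
    for src, dst in edges:
        (r1, c1), (r2, c2) = placement[src], placement[dst]
        if abs(r1 - r2) + abs(c1 - c2) != 1:
            return False
    return True


def place_las_vegas(
    nodes: List[str],
    edges: List[Tuple[str, str]],
    size: int,
    max_attempts: int = 1000,
    seed: Optional[int] = None,
) -> Optional[Dict[str, Cell]]:
    """Randomly assign nodes to distinct cells until the placement is valid."""
    rng = random.Random(seed)
    cells = [(r, c) for r in range(size) for c in range(size)]
    if len(nodes) > len(cells):
        return None  # the graph cannot fit on this overlay
    for _ in range(max_attempts):
        chosen = rng.sample(cells, len(nodes))  # one functional unit per node
        placement = dict(zip(nodes, chosen))
        if is_valid(placement, edges):
            return placement  # any returned answer is guaranteed correct
    return None  # give up; only the running time was unlucky
```

Blind random restarts like these scale poorly; a practical mapper for several dozen nodes would need a much smarter randomized strategy, which is where a custom algorithm such as TFA's comes in.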

Future perspectives

Our long-term vision is to develop a framework that significantly reduces development time by allowing code to be written just once, in the form most natural for the high-level developer, and then optimized on the fly only when needed and according to the available hardware capabilities. This would be a considerable improvement over current HLS techniques and would ease the transition towards more heterogeneous, and far less energy-demanding, forms of computing.

TFA @FPL2016

We presented a demo of our work at the FPL2016 conference and received very positive feedback and encouraging comments!

Publications

[1] R. Rigamonti, B. Delporte, A. Convers, A. Dassatti, “Transparent Live Code Offloading on FPGA” - FSP 2016.

[2] B. Delporte, R. Rigamonti, A. Dassatti, “Toward Transparent Heterogeneous Systems” - MULTIPROG 2016.

[3] B. Delporte, R. Rigamonti, A. Dassatti, “HPA: An Opportunistic Approach to Embedded Energy Efficiency” - OPTIM 2016.