HPA

Heterogeneous Platform Accelerator: An opportunistic approach to embedded energy efficiency

- Project lead: DASSATTI Alberto

- Period: October 2014 - December 2015


Reducing energy consumption is a challenge faced daily by teams in both the high-performance computing and embedded domains. The issue is mostly approached from a hardware perspective, by devising architectures that make energy efficiency a primary target, often at the cost of processing power. Computing platforms have lately become increasingly heterogeneous, but exploiting these additional capabilities is so complex from the application developer’s perspective that the optimization actually achieved is often limited.

The Heterogeneous Platform Accelerator (HPA) project, developed at the REDS Institute, is the successor of the Versatile Performance Enhancer (VPE) project. VPE introduced a technology that eases the developer’s work by profiling code at run-time and then automatically dispatching the computationally intensive parts to external accelerators in a dynamic and transparent way. In particular, VPE showed that large performance improvements could be achieved by dynamically off-loading portions of code to a DSP accelerator without requiring the developer to change the code. Nonetheless, the lack of a suitable compiler backend for the chosen accelerator required a set of scripts to compile the source code ahead of time using a proprietary compiler.

HPA targets a different accelerator family, the GPU, for which a backend is available; this allows us to compile the portions of code of interest on the fly. Moreover, HPA puts a strong emphasis on energy efficiency, investigating how the optimization affects power dissipation, and introduces an additional step in which computing-intensive functions are analyzed to determine whether they can be parallelized and whether doing so is worthwhile. Again, this is done automatically at run-time, thus requiring no effort from the developer and adapting to the current input data and load.

How does HPA work?

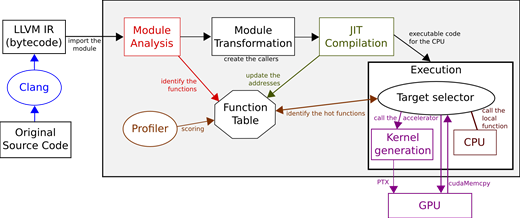

HPA is a framework built around LLVM’s Just-In-Time compiler, with components dealing with the different stages of the optimization process. As in VPE, the available functions are detected in the Intermediate Representation (IR) at application startup, and function invocations are replaced with a caller that, when the function is not off-loaded, simply executes the desired function on the CPU via a function pointer.

Once a function is selected for off-loading, we alter the function pointer to make it point to the function ready to be executed on the GPU.
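The caller mechanism described above can be illustrated with a minimal Python sketch. It is not HPA's implementation, which works on LLVM IR; the names `Caller`, `saxpy_cpu`, `saxpy_gpu`, and `trace` are hypothetical, and the "GPU" version is a host-side placeholder that only records which target ran.

```python
from typing import Callable, List

# Records which implementation served each call (illustration only).
trace: List[str] = []


class Caller:
    """Hypothetical stand-in for the caller that replaces a function invocation."""

    def __init__(self, cpu_impl: Callable[..., object]) -> None:
        self._cpu_impl = cpu_impl
        self._target = cpu_impl  # plays the role of the function pointer

    def __call__(self, *args):
        # Every invocation is forwarded to whatever the pointer currently designates.
        return self._target(*args)

    def offload(self, gpu_impl: Callable[..., object]) -> None:
        # Off-loading only redirects the pointer; call sites stay untouched.
        self._target = gpu_impl

    def revert(self) -> None:
        # Point back to the original CPU implementation.
        self._target = self._cpu_impl


def saxpy_cpu(a, xs, ys):
    trace.append("cpu")
    return [a * x + y for x, y in zip(xs, ys)]


def saxpy_gpu(a, xs, ys):
    # Placeholder: a real system would launch a GPU kernel here.
    trace.append("gpu")
    return [a * x + y for x, y in zip(xs, ys)]
```

Creating `saxpy = Caller(saxpy_cpu)` and later calling `saxpy.offload(saxpy_gpu)` changes where the work runs while every call site keeps invoking `saxpy(...)` unchanged, which is what makes the off-loading transparent to the developer.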

Detecting whether a function deserves off-loading to the GPU is a two-step process. First, functions that perform heavy computations are identified by the perf_event performance monitor. perf_event collects very detailed statistics about software and hardware counters, and makes it easy to identify performance bottlenecks. In the context of this project we rely on CPU usage alone to identify functions that might benefit from being off-loaded, but many more optimizations could be devised. Once we have a sorted list of candidate functions for acceleration, we inspect each of them in turn, checking with Polly whether it is parallelizable. Polly also operates at the IR level: it starts by translating the code to be optimized into a polyhedral representation, on which it performs parallelism detection and optimization. For the sake of simplicity, we operate at the function level, off-loading entire functions to the accelerator. HPA is, however, independent of the choice of scale, so we could just as well operate at the basic-block level, which could be interesting, for instance, for a multi-threaded function.
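The two-step selection can be sketched as follows. This is an illustration under stated assumptions, not HPA's code: the profile is a plain dictionary of per-function CPU shares rather than real perf_event data, the `min_share` threshold is invented for the example, and `is_parallelizable` is a hypothetical predicate standing in for the Polly check.

```python
from typing import Callable, Dict, List


def rank_candidates(cpu_share: Dict[str, float], min_share: float = 0.05) -> List[str]:
    """Step 1: keep the functions using enough CPU, sorted by decreasing share."""
    hot = [(name, share) for name, share in cpu_share.items() if share >= min_share]
    hot.sort(key=lambda item: item[1], reverse=True)
    return [name for name, _ in hot]


def select_for_offload(
    cpu_share: Dict[str, float],
    is_parallelizable: Callable[[str], bool],  # hypothetical stand-in for the Polly check
    min_share: float = 0.05,
) -> List[str]:
    """Step 2: inspect the ranked candidates in order and keep the parallelizable ones."""
    return [name for name in rank_candidates(cpu_share, min_share) if is_parallelizable(name)]
```

Ranking first and checking parallelizability second keeps the more expensive analysis limited to the functions that dominate CPU usage.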

Once a function has been selected for off-loading to the GPU, we use LLVM’s backend to generate on the fly the PTX code that is sent to the GPU. Data is transferred to an accessible memory region using the dedicated CUDA API, and the execution is started. The results are transferred back once the computation has finished.

The architecture of HPA is depicted below: the input code undergoes an analysis step, during which functions are identified and callers for the potential candidates are created. The code is then executed by the JIT framework, and performance is monitored to identify optimization opportunities. Once a function deemed worth off-loading is detected, the corresponding PTX code is loaded (or generated by LLVM’s backend if this is the first invocation of the function) and the required data is transferred to the target device. At this point the function is ready for execution, and its subsequent invocations will run on the remote target unless the system detects that it is under-performing and reverts its off-loading decision, leaving the device available to other code blocks.
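The load-or-generate step and the revert decision can be sketched as a small bookkeeping class. This is a simplified illustration, not HPA's implementation: `OffloadManager`, `generate_ptx`, and `slowdown_tolerance` are hypothetical names, and the rule used here (revert when the measured GPU time exceeds the CPU time) is only an assumed definition of "under-performing".

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class OffloadManager:
    generate_ptx: Callable[[str], str]  # hypothetical stand-in for the backend
    slowdown_tolerance: float = 1.0     # assumed policy: revert if gpu_time > tolerance * cpu_time
    ptx_cache: Dict[str, str] = field(default_factory=dict)
    offloaded: Dict[str, bool] = field(default_factory=dict)
    log: List[str] = field(default_factory=list)

    def prepare(self, name: str) -> str:
        """Generate the device code on first use, reuse the cached copy afterwards."""
        if name not in self.ptx_cache:
            self.log.append(f"generate {name}")
            self.ptx_cache[name] = self.generate_ptx(name)
        else:
            self.log.append(f"load {name}")
        self.offloaded[name] = True
        return self.ptx_cache[name]

    def report(self, name: str, cpu_time: float, gpu_time: float) -> bool:
        """Revert the decision if the off-loaded version under-performs.

        Returns True while the function remains off-loaded.
        """
        if self.offloaded.get(name, False) and gpu_time > self.slowdown_tolerance * cpu_time:
            self.offloaded[name] = False
            self.log.append(f"revert {name}")
        return self.offloaded.get(name, False)
```

Keeping the generated code in a cache means that a function reverted to the CPU can be off-loaded again later without paying the compilation cost a second time.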

Demonstration on the NVIDIA Jetson TK1 Board

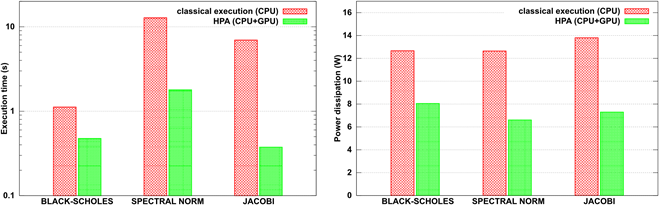

HPA has been tested on an NVIDIA Jetson TK1 board, which features a 4+1 core, 32-bit ARM Cortex-A15 processor running at 1 GHz and a 192-core GK20A (Kepler) GPU running at 852 MHz.

Experiments on multiple datasets show that execution on the combined CPU+GPU system outperforms its CPU-only counterpart in both execution time and power consumption. An example over three standard numerical codes (the Black-Scholes model, Spectral Norm computation, and the Jacobi iterative method) is shown in the figure below.

For additional experiments, including an overhead analysis, please refer to our paper [1].

Applications and Perspectives

HPA allows the transparent off-loading of computationally intensive fragments of easily parallelizable code to a GPU accelerator, lowering the overall power consumption. As a side benefit, this significantly improves performance, owing to both the parallel computing capabilities of the GPU and the reduced computational load on the main CPU. While HPA was developed as a proof of concept, it opens several interesting perspectives:

- Combined with VPE, platforms with a higher degree of heterogeneity can be targeted.

- Complex user-defined policies can be integrated to customize the energy profile of the application.

- Other performance metrics can be used, providing a closer match to user requirements.

Publications

[1] B. Delporte, R. Rigamonti, and A. Dassatti. HPA: An Opportunistic Approach to Embedded Energy Efficiency. In OPTIM Workshop, International Conference on High Performance Computing & Simulation (HPCS), 2016.