Heterogeneous architectures give system integrators a compact, cost-effective way of adding advanced functionality to products through specialized computational units.

Each computational unit offers resources tailored to specific algorithms, computations and operations. While this heterogeneity opens up a wide range of possible applications and ever-growing computational power, dealing with the variety of architectures and selecting the proper target is not trivial.

From the developer's point of view, exploiting the available hardware requires a wide range of skills that not every company or institution has in-house. Acquiring this experience and know-how has a cost, both in money and in time. Consequently, the adoption of new technologies is limited in small and medium-sized companies.

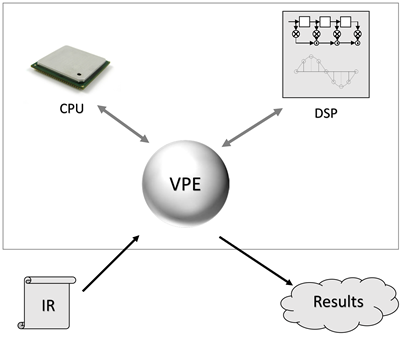

The VPE project, developed by the REDS Institute, is the successor of the SOSoC project. SOSoC provided a set of libraries specialized for each computational unit, coupled with a small and efficient runtime engine, but the developer had to select the target manually. VPE goes further: it profiles the application at runtime and dispatches the algorithms to the computational units dynamically and transparently. VPE is a technology aimed at easing the developer's work while automatically improving software execution performance.

Why VPE?

Nowadays, applications require ever more computational power. While sequential execution of instructions has reached its limits, parallelization is opening new perspectives. Meanwhile, homogeneous symmetric multiprocessing (SMP), typical of servers and clusters, is being abandoned in favour of asymmetric architectures.

Modern SoCs embed many computing units in a single chip: processors (CPU), co-processors, Digital Signal Processors (DSP), Graphics Processing Units (GPU), video accelerators, programmable logic (FPGA) and many other dedicated processing units.

Making the most of each specialized unit requires adapting and migrating existing code. Customizing a specific product for such systems is costly and time-consuming.

So, what can we do?

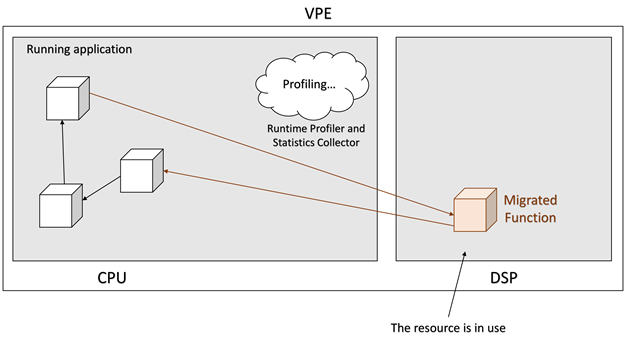

VPE offers a new technology that makes software run on the available resources by providing a runtime profiling mechanism and dynamic computational unit selection. The programmer does not need to invoke any wrapper to tell the system which functions and units to operate on, nor to reimplement or adapt the function for the computational unit of interest.

How does VPE work?

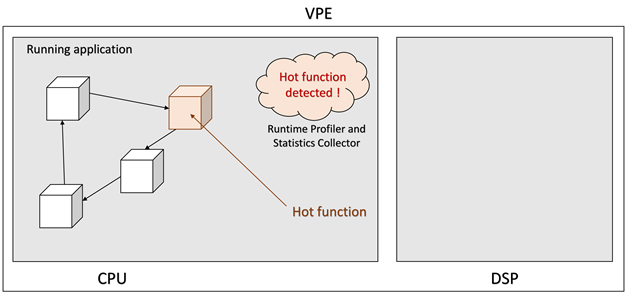

VPE collects advanced performance statistics at runtime, so functions that require high computational resources are detected automatically while the application is running. The most demanding functions, called “hot functions”, are selected and dynamically dispatched to the available computational units.

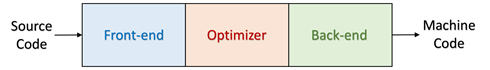

A classical compiler has three major components: the front-end, the optimizer and the back-end. The front-end parses the source code, checks it for errors and builds a language-specific representation. This representation is then optionally converted into another one, on which the optimizer runs. Finally, the back-end generates the executable machine code.

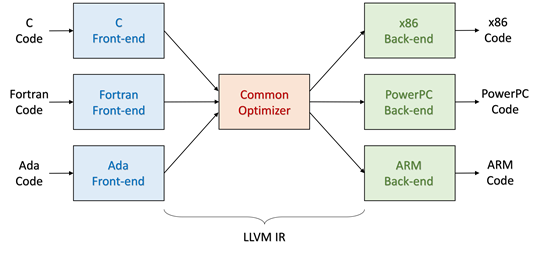

While a classical compiler is specific to one language, LLVM, a set of libraries for building compilers, has a modular design that supports multiple source languages and target architectures. Language-specific front-ends, for instance for C, Fortran, or Ada, translate the source code into a common intermediate representation (IR), which is processed by a common optimizer. Architecture-specific back-ends then generate code for the target, for example x86, PowerPC, or ARM. LLVM also supports cross-compilation.

The core of VPE is based on the Just-In-Time (JIT) compilation technology provided by LLVM, which compiles code while the application is executing.

Starting from the IR, obtained with Clang, LLVM's C/C++ compiler front-end, VPE dynamically adapts the code and prepares it for computational unit selection and dispatching. The IR is compiled to machine code at runtime and executed immediately. Only the parts of the code that are actually used get compiled, and the code can be adapted to any target supported by LLVM. JIT technology has a considerable advantage: the code benefits from any improvement in the underlying architecture and optimizer, and can be recompiled on the fly.

When a hot function is identified, the computational unit switching process is transparent and does not require any user intervention.

Demonstration on the REPTAR board

VPE has been implemented on the REPTAR board for the demonstration prototype.

The REPTAR board is equipped with a Texas Instruments DM3730 SoC, which includes an ARM Cortex-A8 core and a C64x+ DSP. This heterogeneous architecture can benefit from VPE.

The starting point of the demonstration is plain C code that computes convolutions on a video. This image processing technique multiplies each pixel neighbourhood element-wise by a kernel matrix and sums the results, so it is dominated by multiplications and additions. Video decoding and display are performed by the OpenCV library.

At the beginning of the execution, the video processing takes 100% of the Cortex-A8 core, where all the functions are running.

After a few seconds, VPE detects that the convolution function is the one requiring the most computational power.

This hot function is selected and transparently migrated to the DSP. Data is shared between the computational units through shared memory.

The DSP significantly accelerates the convolution: as a result, CPU usage drops to 50% and the frame rate increases.

The code running on the Cortex-A8 core and on the DSP comes from the same C source, which was not modified.

Benchmarking on the REPTAR board

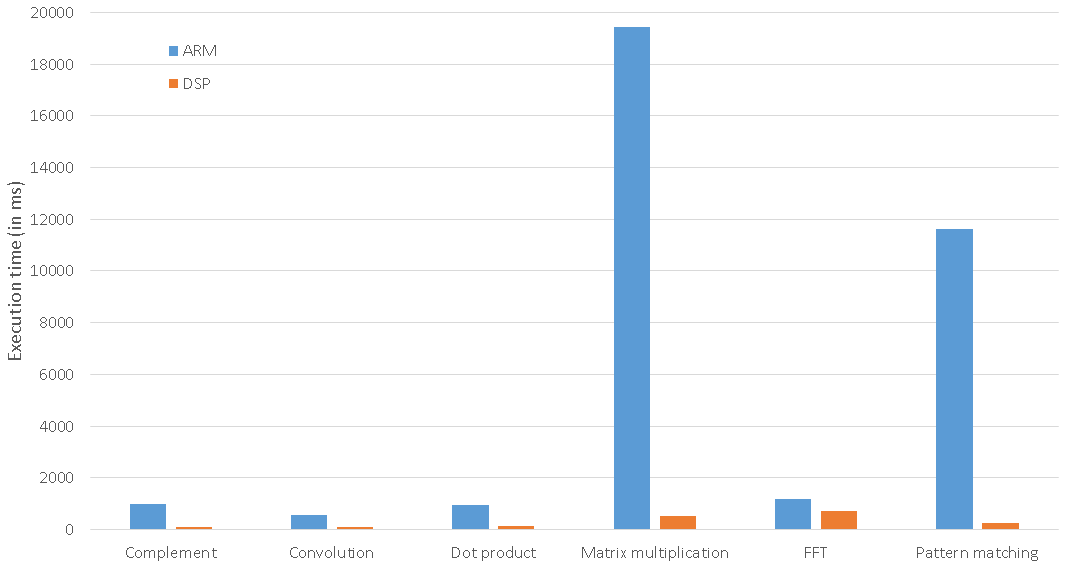

Several classical algorithms have been implemented in C and tested on VPE:

- Construction of the complementary nucleotide sequence of a DNA sequence extract

- Convolution using a kernel matrix (used in the demonstrator)

- Dot product of 2 vectors

- Multiplication of 2 square matrices

- Fast Fourier Transform

- Pattern matching: identification of a nucleotide sequence in a DNA sequence extract

The matrix multiplication runs 37 times faster on the DSP than on the ARM core. The pattern matching algorithm runs 43 times faster on the DSP than on the ARM core.

Applications and perspectives

VPE allows applications that were not necessarily written for parallel or specialized computational units to benefit from them. This opens new perspectives:

- If functions are highly parallelizable, they can be sent to processing units with highly parallel architectures.

- Low-power computational units can be selected for systems that require advanced power management.

- The functions can be optimized and adapted to the selected computational units.

- The discovery of new computational units in the ecosystem can be automated.